Plant Smart Monitoring System

About the Client

A startup that monitors plants and uses AI to diagnose plant diseases early and send timely notifications about problems.

Business Challenge

The client wanted to automate the manual work of gardeners who monitor indoor plants, namely hemp, by using cameras and computer vision techniques. The resulting greenhouse management solution connects hardware and ML to monitor plant health.

The client approached us with the following challenges:

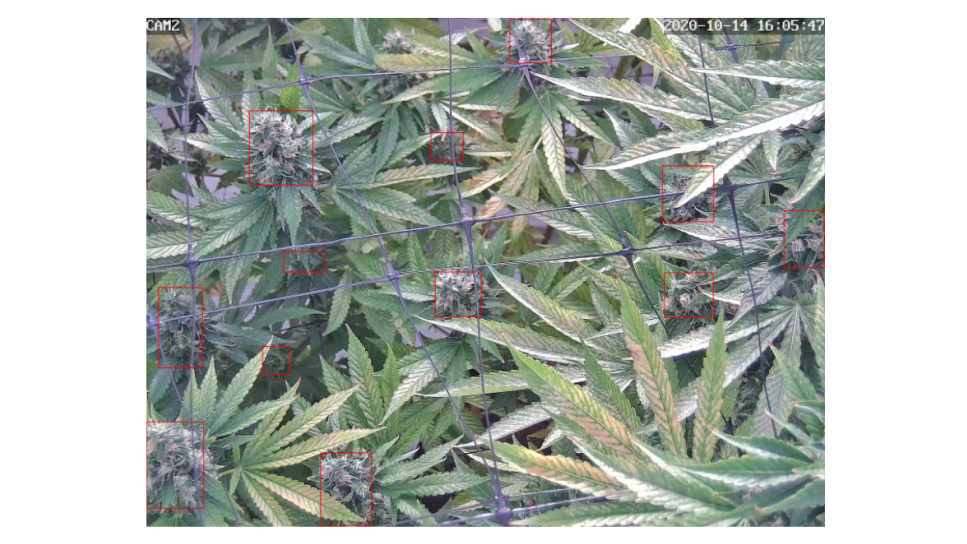

Hemp flowers detection: find hemp flowers in photos of hemp plants taken on the indoor farm. This information can be used to distinguish between two phases of the hemp growth cycle and can give the business insights into when to start or stop harvesting.

Classification of healthy/sick hemp plants: detect signs of illness on the hemp plants, such as grayish or yellowish leaves and rashes or stains on the leaves. This information would allow the business to monitor plant health automatically and quickly treat or remove the affected plants, thus saving around 25% of the crop.

Solution Overview

We joined the project when the startup was already up and running, but its existing technological approach did not deliver what the business needed. Quantum applied the latest computer vision techniques to build image processing solutions for the challenges described above:

To solve the hemp flowers detection problem, images from the cameras had to be collected and prepared for training. In line with the client's goal, we divided the images into 3 types, depending on the growth stage of the plants. Then we trained several object detection models. The resulting models reached around 80% accuracy.

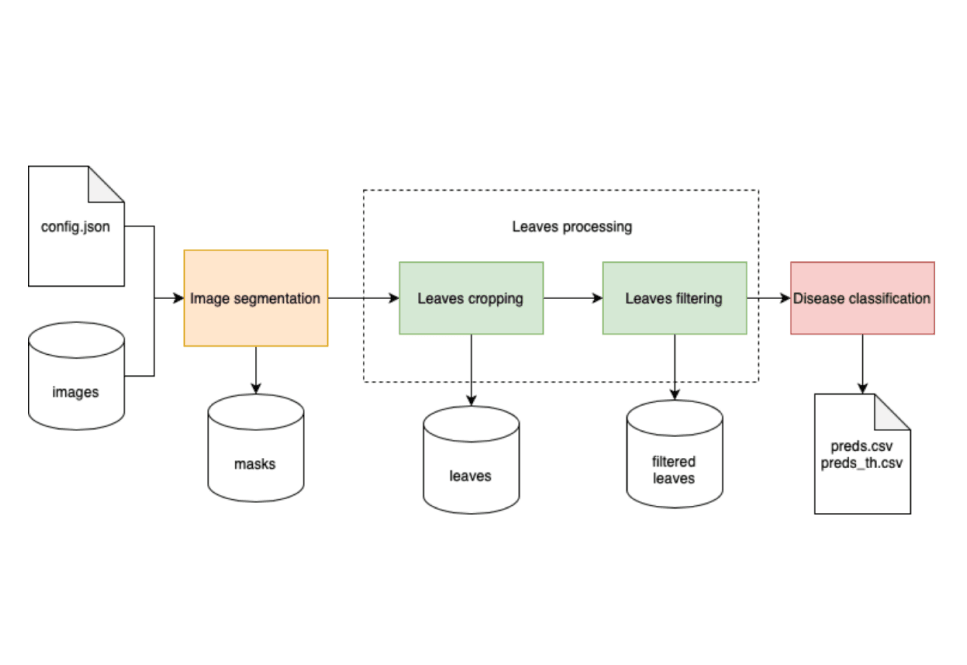

For the second problem, we created a pipeline with the following stages: image segmentation (to find leaves in the source camera shots), leaf processing (to extract and filter valuable information for each detected leaf), and disease classification (to detect diseased leaves, which can show multiple symptoms). In the end, our model worked with 11 classes and reached a sample-averaged F1-score of up to 0.9 (90%).

Project Description

Hemp flowers detection

When the client came to us, they already had a Proof of Concept and a demo, along with some ideas on how to improve the initial demo to meet their goals. To address this challenge, we proposed a deep learning approach, namely the YOLOv4 neural network, which provides a strong baseline for object detection tasks and was designed to be adapted to a variety of domains and applications.

Images from the early stages were especially important because it was crucial to identify the date when the hemp starts flowering. For this reason, we paid extra attention to dataset preparation. We then trained several models with different configurations and input data. First, we tried the default Darknet YOLOv4 model, which unfortunately could not be trained because the input images were too large. We therefore cropped the 2560 × 1920 images into tiles of different sizes, such as 853 × 960 (6 tiles per image) and 426 × 480 (24 tiles per image). Afterward, we tuned the model parameters to reach more accurate results.
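The tiling step can be illustrated with a minimal sketch. It assumes a regular, non-overlapping grid (3 × 2 for the 6-tile split, 6 × 4 for the 24-tile split); the function name `tile_boxes` and the way leftover pixel columns are distributed are illustrative assumptions, not the project's actual code.

```python
def tile_boxes(width, height, cols, rows):
    """Return (left, top, right, bottom) crop boxes for a cols x rows grid.

    Edges use integer division, so the tiles cover the image exactly even
    when the width is not divisible by cols (an illustrative choice).
    """
    boxes = []
    for r in range(rows):
        top, bottom = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            left, right = c * width // cols, (c + 1) * width // cols
            boxes.append((left, top, right, bottom))
    return boxes


# 2560 x 1920 source frame, split as described in the text
six_tiles = tile_boxes(2560, 1920, cols=3, rows=2)          # about 853 x 960 each
twenty_four_tiles = tile_boxes(2560, 1920, cols=6, rows=4)  # about 426 x 480 each
print(six_tiles[0], twenty_four_tiles[0])
```

With an OpenCV/NumPy image array, a single tile is then `image[top:bottom, left:right]`. Any bounding-box annotations would also need to be shifted into tile coordinates.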

Multilabel disease classification

To solve this task, we divided it into stages, each with its own approach:

First, we trained a custom deep learning model to detect leaf contours. We found public datasets and applied transfer learning on our data to reach more accurate results. This was especially helpful because we had only a limited number of annotated camera images. We then applied various post-processing methods, such as watershed, quickshift, segment merging, and model ensembling, to improve model performance and obtain the results as instance segmentation masks. On evaluation, our model achieved an mAP@0.25 between 0.75 and 0.8.
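As conventionally defined, mAP@0.25 treats a predicted instance as correct when its mask overlaps a ground-truth instance with an intersection over union (IoU) of at least 0.25. The sketch below shows only that matching step, not the full average-precision computation. Masks are represented as sets of pixel coordinates for simplicity, and the helper names `mask_iou` and `match_at_iou` are hypothetical.

```python
def mask_iou(a, b):
    """IoU of two masks given as sets of (row, col) pixel coordinates."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def match_at_iou(predictions, ground_truth, threshold=0.25):
    """Greedily match predicted masks to ground-truth masks.

    `predictions` is assumed to be sorted by descending confidence.
    Returns one boolean per prediction: True for a true positive.
    """
    unmatched = set(range(len(ground_truth)))
    hits = []
    for pred in predictions:
        best_iou, best_idx = 0.0, None
        for idx in unmatched:
            iou = mask_iou(pred, ground_truth[idx])
            if iou > best_iou:
                best_iou, best_idx = iou, idx
        if best_idx is not None and best_iou >= threshold:
            unmatched.discard(best_idx)  # each ground-truth leaf matches once
            hits.append(True)
        else:
            hits.append(False)
    return hits


def rect(top, left, bottom, right):
    """Toy rectangular mask used only for this example."""
    return {(r, c) for r in range(top, bottom) for c in range(left, right)}


leaf_gt = [rect(0, 0, 4, 4)]
leaf_pred = [rect(0, 0, 4, 2), rect(10, 10, 12, 12)]
print(match_at_iou(leaf_pred, leaf_gt))
```

The first prediction covers half of the ground-truth leaf (IoU 0.5) and counts as a match; the second does not overlap any leaf and counts as a false positive.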

Next, each leaf instance had to be extracted from the source image, cropped, and resized. The main issue at this stage was filtering out irrelevant data from the previous stage, so we trained several binary classification models to keep only the leaves that are clearly visible. The image resolution was too low, which made it hard both for the labeling team to create good annotations and for the deep learning model to work with small features. Consequently, we determined the optimal image size and applied appropriate preprocessing to the extracted leaves. On evaluation, our model showed an F1-score above 0.9, which means almost all valuable information was passed on to the following stage.
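The extraction step can be sketched as follows, assuming each leaf comes with a binary instance mask of the same size as the image. Plain nested lists are used to keep the example self-contained; in practice this would more likely be done on NumPy arrays and followed by a resize (for example with OpenCV). The names `mask_bbox` and `crop_leaf` and the choice to blank out background pixels are assumptions for illustration.

```python
def mask_bbox(mask):
    """Return (left, top, right, bottom) of the mask's non-zero area, or None."""
    if not mask or not mask[0]:
        return None
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # empty mask: nothing to crop
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return min(cols), min(rows), max(cols) + 1, max(rows) + 1


def crop_leaf(image, mask, background=0):
    """Crop one leaf to its bounding box and blank out non-leaf pixels."""
    box = mask_bbox(mask)
    if box is None:
        return None
    left, top, right, bottom = box
    return [
        [image[r][c] if mask[r][c] else background for c in range(left, right)]
        for r in range(top, bottom)
    ]


# Toy 4 x 4 grayscale image and an L-shaped leaf mask
toy_image = [[r * 10 + c for c in range(4)] for r in range(4)]
toy_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
print(crop_leaf(toy_image, toy_mask))
```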

For the final stage, we tested two main approaches to multilabel classification. We started with one-vs-all classification, where 11 binary classifiers were trained to predict the probabilities of specific diseases. However, this strategy was not successful because the training dataset was too imbalanced.

We then tried a second approach and trained a single multilabel classifier with multiple outputs. We trained several deep learning models with different backbones, loss functions, and post-processing steps. With all improvements, the final model reached a sample-averaged F1-score of up to 0.9 and a Hamming loss of around 0.02, which we consider a strong result.
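To make the two reported metrics concrete, here is a minimal sketch of how they are commonly computed for multilabel predictions. It assumes that the reported F1 is averaged over samples (as in scikit-learn's `average="samples"` option) and that labels are encoded as 0/1 indicator rows; the function names and the toy data are hypothetical.

```python
def hamming_loss(y_true, y_pred):
    """Fraction of individual label slots that are predicted incorrectly."""
    total = sum(len(row) for row in y_true)
    wrong = sum(
        t != p
        for true_row, pred_row in zip(y_true, y_pred)
        for t, p in zip(true_row, pred_row)
    )
    return wrong / total


def samples_f1(y_true, y_pred):
    """F1 computed per sample over its label set, then averaged."""
    scores = []
    for true_row, pred_row in zip(y_true, y_pred):
        tp = sum(1 for t, p in zip(true_row, pred_row) if t == 1 and p == 1)
        denom = sum(true_row) + sum(pred_row)
        # Convention (an assumption): a sample with no true and no
        # predicted labels scores 0.0.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy example with 3 classes instead of the 11 used in the project
y_true = [[1, 0, 1], [0, 1, 0]]
y_pred = [[1, 0, 0], [0, 1, 0]]
print(hamming_loss(y_true, y_pred), samples_f1(y_true, y_pred))
```

In this toy case one of six label slots is wrong, so the Hamming loss is 1/6, and the per-sample F1 values of 2/3 and 1 average to 5/6. A Hamming loss of around 0.02 therefore means roughly 2% of all label slots were misclassified.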

Read our full story in the recent article.

Technological Details

For this project, we used a broad technology stack that includes Python, PyTorch, Docker-Nvidia, OpenCV, and scikit-image.